%mavenRepo scijava.public https://maven.scijava.org/content/groups/public

%maven org.janelia.saalfeldlab:n5-ij:4.0.2

In this notebook, we will learn how to work with the N5 API and ImgLib2.

The N5 API unifies block-wise access to potentially very large n-dimensional data over a variety of storage backends. The currently supported backends are the simple N5 format on the local filesystem, Google Cloud and AWS S3, the HDF5 file format, and Zarr. The ImgLib2 bindings use this API to expose the data as memory-cached, lazily loaded cell images.
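To make "block-wise access" concrete, here is a minimal sketch in plain Java of how a volume is divided into a grid of blocks. It is not part of the N5 API. The dimensions are those of the volume opened later in this notebook, while the 64×64×64 block size and the names `BlockGrid`, `gridSize`, and `blockOf` are assumptions chosen for illustration.

```java
import java.util.Arrays;

public class BlockGrid {

    /** Number of blocks along each axis: ceil(dimension / blockSize). */
    public static long[] gridSize(long[] dimensions, int[] blockSize) {
        long[] grid = new long[dimensions.length];
        for (int d = 0; d < dimensions.length; d++)
            grid[d] = (dimensions[d] + blockSize[d] - 1) / blockSize[d];
        return grid;
    }

    /** Grid position of the block that contains the given voxel. */
    public static long[] blockOf(long[] voxel, int[] blockSize) {
        long[] block = new long[voxel.length];
        for (int d = 0; d < voxel.length; d++)
            block[d] = voxel[d] / blockSize[d];
        return block;
    }

    public static void main(String[] args) {
        long[] dimensions = {750, 100, 398}; // dimensions of the volume used in this notebook
        int[] blockSize = {64, 64, 64};      // hypothetical block size, for illustration only

        // blocks per axis; the last block on each axis may be only partially filled
        System.out.println(Arrays.toString(gridSize(dimensions, blockSize))); // [12, 2, 7]

        // the block holding voxel (400, 50, 199)
        System.out.println(Arrays.toString(blockOf(new long[] {400, 50, 199}, blockSize))); // [6, 0, 3]
    }
}
```

Reading a small region therefore only requires fetching the few blocks that overlap it, which is what makes lazy, cached access to very large volumes practical.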

This notebook uses code and data examples from the ImgLib2 large data tutorial I2K2020 workshop (GitHub repository).

First let’s add the necessary dependencies. We will load the n5-ij module which will transitively load ImgLib2 and all the N5 API modules that we will be using in this notebook. It will also load ImageJ which we will use to display data. If this is the first time you are loading dependencies, running this can take quite a while. Next time, everything will be cached though…

Next, we register a simple renderer that uses ImgLib2’s ImageJ bridge and Spencer Park’s image renderer to render the first 2D slice of a RandomAccessibleInterval into the notebook. We also add a renderer for arrays and maps, because we want to list directories and attributes maps later.


import com.google.gson.*;

import io.github.spencerpark.jupyter.kernel.display.common.*;

import io.github.spencerpark.jupyter.kernel.display.mime.*;

import net.imglib2.img.display.imagej.*;

import net.imglib2.view.*;

import net.imglib2.*;

getKernelInstance().getRenderer().createRegistration(RandomAccessibleInterval.class)

.preferring(MIMEType.IMAGE_PNG)

.supporting(MIMEType.IMAGE_JPEG, MIMEType.IMAGE_GIF)

.register((rai, context) -> Image.renderImage(

ImageJFunctions.wrap(rai, rai.toString()).getBufferedImage(),

context));

getKernelInstance().getRenderer().createRegistration(String[].class)

.preferring(MIMEType.TEXT_PLAIN)

.supporting(MIMEType.TEXT_HTML, MIMEType.TEXT_MARKDOWN)

.register((array, context) -> Text.renderCharSequence(Arrays.toString(array), context));

getKernelInstance().getRenderer().createRegistration(long[].class)

.preferring(MIMEType.TEXT_PLAIN)

.supporting(MIMEType.TEXT_HTML, MIMEType.TEXT_MARKDOWN)

.register((array, context) -> Text.renderCharSequence(Arrays.toString(array), context));

getKernelInstance().getRenderer().createRegistration(Map.class)

.preferring(MIMEType.TEXT_PLAIN)

.supporting(MIMEType.TEXT_HTML, MIMEType.TEXT_MARKDOWN)

.register((map, context) -> Text.renderCharSequence(map.toString(), context));

We will now open N5 datasets from some sources as lazy-loading ImgLib2 cell images. To open the N5 readers, we will use the helper class N5Factory, which parses the URL and/or some magic bytes in file headers to pick the right reader or writer for the various possible N5 backends. If you know which backend you are using, you should probably use the appropriate implementation directly; it’s not difficult.
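As an illustration of what "magic bytes" means, the sketch below checks whether a file starts with the 8-byte HDF5 signature. This is not N5Factory's actual detection logic; the class and method names are hypothetical, and the check only looks at offset 0, whereas HDF5 files with a user block carry the signature at a later offset.

```java
import java.io.IOException;
import java.io.InputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Arrays;

public class MagicByteSniffer {

    /** The 8-byte signature found at the start of an HDF5 file. */
    private static final byte[] HDF5_SIGNATURE = {
        (byte) 0x89, 'H', 'D', 'F', '\r', '\n', 0x1a, '\n'
    };

    /** Returns true if the file begins with the HDF5 signature (offset 0 only). */
    public static boolean looksLikeHdf5(Path file) throws IOException {
        if (!Files.isRegularFile(file))
            return false;
        try (InputStream in = Files.newInputStream(file)) {
            // reads fewer bytes if the file is shorter than the signature
            byte[] header = in.readNBytes(HDF5_SIGNATURE.length);
            return Arrays.equals(header, HDF5_SIGNATURE);
        }
    }

    public static void main(String[] args) throws IOException {
        Path candidate = Path.of(args[0]);
        System.out.println(candidate + " looks like HDF5: " + looksLikeHdf5(candidate));
    }
}
```

A directory-based container such as N5 or Zarr on the filesystem has no single file header to inspect, so a factory would presumably have to rely on the URL or on the directory contents in those cases.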

import ij.*;

import net.imglib2.converter.*;

import net.imglib2.type.numeric.integer.*;

import org.janelia.saalfeldlab.n5.*;

import org.janelia.saalfeldlab.n5.ij.*;

import org.janelia.saalfeldlab.n5.imglib2.*;

import org.janelia.saalfeldlab.n5.universe.*;

/* make an N5 reader, we start with a public container on AWS S3 */

final var n5Url = "https://janelia-cosem.s3.amazonaws.com/jrc_hela-2/jrc_hela-2.n5";

final var n5Group = "/em/fibsem-uint16";

final var n5Dataset = n5Group + "/s4";

final var n5 = new N5Factory().openReader(n5Url);

/* open a dataset as a lazy loading ImgLib2 cell image */

final RandomAccessibleInterval<UnsignedShortType> rai = N5Utils.open(n5, n5Dataset);

/* This is a 3D volume, so let's show the center slice */

Views.hyperSlice(rai, 2, rai.dimension(2) / 2);

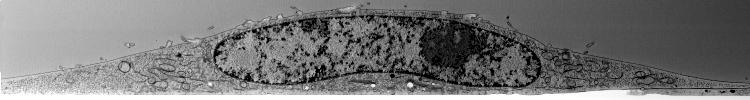

That’s a bit low on contrast. Let’s make it look like TEM and show a few hyperslices through the 3D volume:

var raiContrast = Converters.convert(

rai,

(a, b) -> b.setReal(

Math.max(

0,

Math.min(

255,

255 - 255 * (a.getRealDouble() - 26000) / 6000))),

new UnsignedByteType());

display(Views.hyperSlice(raiContrast, 2, rai.dimension(2) / 10 * 4), "image/jpeg");

display(Views.hyperSlice(raiContrast, 2, rai.dimension(2) / 2), "image/jpeg");

display(Views.hyperSlice(raiContrast, 2, rai.dimension(2) / 10 * 6), "image/jpeg");
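The converter above is a linear intensity window followed by an inversion and a clamp. The following plain-Java sketch applies the same arithmetic to a handful of raw values so the mapping is easier to follow. The class and method names are hypothetical, and rounding to the nearest integer is an assumption about how the clamped value ends up stored in an 8-bit type.

```java
public class ContrastMapping {

    /**
     * Maps a raw intensity to an inverted 8-bit value: raw == min gives 255,
     * raw == min + range gives 0, and values outside that window are clamped.
     */
    public static int toInvertedByte(double raw, double min, double range) {
        double scaled = 255 - 255 * (raw - min) / range;
        double clamped = Math.max(0, Math.min(255, scaled));
        return (int) Math.round(clamped); // rounding is an assumption, see lead-in
    }

    public static void main(String[] args) {
        // same window as in the converter above: starts at 26000, spans 6000
        for (double raw : new double[] {20000, 26000, 29000, 32000, 40000})
            System.out.println(raw + " -> " + toInvertedByte(raw, 26000, 6000));
    }
}
```

Raw values at or below 26000 come out white (255) and values at or above 32000 come out black (0), which produces the inverted, TEM-like look.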

We can list the attributes of every group or dataset along with their types, and read any of them into matching types:

var groupAttributes = n5.listAttributes(n5Group);

var datasetAttributes = n5.listAttributes(n5Dataset);

display(

"**" + n5Group + "** attributes are ```" +

groupAttributes.toString().replace(", ", ",\n").replace("{", "{\n") + "```",

"text/markdown");

display(

"**" + n5Dataset + "** attributes are ```" +

datasetAttributes.toString().replace(", ", ",\n").replace("{", "{\n") + "```",

"text/markdown");

var n5Version = n5.getAttribute("/", "n5", String.class);

var dimensions = n5.getAttribute(n5Dataset, "dimensions", long[].class);

var compression = n5.getAttribute(n5Dataset, "compression", Compression.class);

var dataType = n5.getAttribute(n5Dataset, "dataType", DataType.class);

display(n5Version);

display(dimensions);

display(compression);

display(dataType);

/em/fibsem-uint16 attributes are { pixelResolution=class java.lang.Object, multiscales=class [Ljava.lang.Object;, n5=class java.lang.String, scales=class [Ljava.lang.Object;, axes=class [Ljava.lang.String;, name=class java.lang.String, units=class [Ljava.lang.String;}

/em/fibsem-uint16/s4 attributes are { transform=class java.lang.Object, pixelResolution=class java.lang.Object, dataType=class java.lang.String, name=class java.lang.String, compression=class java.lang.Object, blockSize=class [J, dimensions=class [J}

2.0.0

[750, 100, 398]

org.janelia.saalfeldlab.n5.GzipCompression@78c84e2f

uint16

Let’s save the contrast-adjusted uint8 version of the volume into three N5-supported containers (N5, Zarr, and HDF5), parallelizing the writes for N5 and Zarr:

import java.nio.file.*;

/* create a temporary directory */

Path tmpDir = Files.createTempFile("", "");

Files.delete(tmpDir);

Files.createDirectories(tmpDir);

var tmpDirStr = tmpDir.toString();

display(tmpDirStr);

/* get the dataset attributes (dataType, compression, blockSize, dimensions) */

final var attributes = n5.getDatasetAttributes(n5Dataset);

/* use 10 threads to parallelize copy */

final var exec = Executors.newFixedThreadPool(10);

/* save this dataset into a filesystem N5 container */

try (final var n5Out = new N5Factory().openFSWriter(tmpDirStr + "/test.n5")) {

N5Utils.save(

raiContrast,

n5Out,

n5Dataset,

attributes.getBlockSize(),

attributes.getCompression(),

exec);

}

/* save this dataset into a filesystem Zarr container */

try (final var zarrOut = new N5Factory().openZarrWriter(tmpDirStr + "/test.zarr")) {

N5Utils.save(

raiContrast,

zarrOut,

n5Dataset,

attributes.getBlockSize(),

attributes.getCompression(),

exec);

}

/* save this dataset into an HDF5 file, parallelization does not help here */

try (final var hdf5Out = new N5Factory().openHDF5Writer(tmpDirStr + "/test.hdf5")) {

N5Utils.save(

raiContrast,

hdf5Out,

n5Dataset,

attributes.getBlockSize(),

attributes.getCompression());

}

/* shut down the executor service */

exec.shutdown();

display(Files.list(tmpDir).map(a -> a.toString()).toArray(String[]::new));

/tmp/12301290762951248139

[/tmp/12301290762951248139/test.n5, /tmp/12301290762951248139/test.zarr, /tmp/12301290762951248139/test.hdf5]

Now let us look at them and see if they all contain the same data:

try (final var n5 = new N5Factory().openReader(tmpDirStr + "/test.n5")) {

final RandomAccessibleInterval<UnsignedByteType> rai = N5Utils.open(n5, n5Dataset);

display(Views.hyperSlice(rai, 2, rai.dimension(2) / 2), "image/jpeg");

}

try (final var n5 = new N5Factory().openReader(tmpDirStr + "/test.zarr")) {

final RandomAccessibleInterval<UnsignedByteType> rai = N5Utils.open(n5, n5Dataset);

display(Views.hyperSlice(rai, 2, rai.dimension(2) / 2), "image/jpeg");

}

try (final var n5 = new N5Factory().openReader(tmpDirStr + "/test.hdf5")) {

final RandomAccessibleInterval<UnsignedByteType> rai = N5Utils.open(n5, n5Dataset);

display(Views.hyperSlice(rai, 2, rai.dimension(2) / 2), "image/jpeg");

}

Let’s clean up temporary storage before we end this tutorial.

try (var n5 = new N5Factory().openWriter(tmpDirStr + "/test.n5")) {

n5.remove();

}

try (var n5 = new N5Factory().openWriter(tmpDirStr + "/test.zarr")) {

n5.remove();

}

try (var n5 = new N5Factory().openWriter(tmpDirStr + "/test.hdf5")) {

n5.remove();

}

Files.delete(tmpDir);
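Note that `Files.delete` on a directory throws a `DirectoryNotEmptyException` if anything is left inside it. Should one of the containers fail to be removed, a recursive delete can serve as a fallback. The sketch below uses only the Java standard library; the class and method names are hypothetical, and the demo directory layout is made up for illustration.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Comparator;
import java.util.stream.Stream;

public class TempDirCleanup {

    /** Deletes a directory tree, removing children before their parents. */
    public static void deleteRecursively(Path root) throws IOException {
        if (Files.notExists(root))
            return;
        try (Stream<Path> paths = Files.walk(root)) {
            // reverse order sorts nested paths ahead of the directories containing them
            paths.sorted(Comparator.reverseOrder()).forEach(p -> {
                try {
                    Files.delete(p);
                } catch (IOException e) {
                    throw new UncheckedIOException(e);
                }
            });
        }
    }

    public static void main(String[] args) throws IOException {
        // createTempDirectory creates the directory in a single call
        Path dir = Files.createTempDirectory("n5-tutorial-");
        Files.createDirectories(dir.resolve("test.n5/em"));
        Files.writeString(dir.resolve("test.n5/attributes.json"), "{}");

        deleteRecursively(dir);
        System.out.println(Files.exists(dir)); // false
    }
}
```

The demo also shows `Files.createTempDirectory`, which creates a uniquely named temporary directory directly instead of creating a temporary file, deleting it, and recreating it as a directory.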